Security Operations Centers confront an uncomfortable paradox: AI agents promise to automate threat response at machine speed, but CISOs are hesitant to deploy them. The reason isn’t a lack of capability; it’s a lack of visibility. When an AI agent autonomously blocks a user account, quarantines a system, or terminates a process, leadership asks a simple question: “Why did it do that?” If the answer is “the AI decided,” that’s not good enough.

Autonomy without auditability is a liability. The industry's rush to deploy autonomous AI SOC agents has created black box systems that lack transparency, exactly the kind of autonomous cyber investigations that CISOs can't confidently deploy. When SOC leaders need to justify incident response decisions to compliance teams and boards, these systems fail the operational test.

The Trust Problem: Why Speed Without Transparency Creates New Issues

The appeal of autonomous cyber investigations is obvious: threat actors move in minutes, but manual investigations take hours. An AI SOC agent that can detect, investigate, and respond to threats without human intervention promises to close that speed gap. But speed without transparency creates new issues.

Consider what happens when an agent auto-remediates a false positive. A legitimate business process gets disrupted. Revenue is impacted. Users are locked out. And when the incident review happens, security leadership discovers they can’t trace the decision path that led to the action. There’s no audit trail showing what evidence the agent evaluated, what patterns it matched, or what alternative explanations it considered. The agent simply acted, and now the team is left reverse-engineering the decision from outcomes alone.

This creates a disconnect between what AI SOC vendors promise and what security organizations can actually accept. Some vendors in this segment tout “autonomous response” and “self-healing SOCs,” but CISOs face regulatory requirements, compliance audits, and board-level questions about security decisions. When a system operates as a black box, every decision becomes a trust exercise rather than a verifiable process.

The fundamental challenge lies in the architecture of most security AI systems. They’re designed for prediction and action, not explanation. The neural networks and machine learning models that power these agents optimize for accuracy, not interpretability. You get a confidence score and a recommended action, but the chain of reasoning that connects evidence to conclusion remains hidden inside the model. For SecOps AI explainability, this architecture is fundamentally inadequate.

Why Black Box SOC AI Agents Fail the Operational Test

The problem intensifies when you examine how SOC investigations actually work. SOC analyst work isn't linear; it's iterative. An analyst sees an alert, gathers initial evidence, forms a hypothesis, tests that hypothesis against additional data, and often pivots to entirely new investigative paths. This is the reality of complex cyber investigation work: you're not executing a predetermined script, you're adapting to threat actor behavior.

Black box agents can’t replicate this investigative flexibility because they can’t expose their reasoning process. When an agent evaluates an authentication anomaly, it might scan dozens of data sources, correlate timestamps, analyze user behavior patterns, and compare against known attack signatures. But if the agent simply returns “high confidence malicious,” the analyst can’t verify that reasoning. They can’t identify gaps in the evidence collection. They can’t add environmental context the agent doesn’t know about. They can’t even determine if the agent checked the most relevant data sources.

This creates a false binary: either trust the agent completely or ignore it entirely. Both extremes introduce unacceptable risk. Complete trust means accepting outcomes you can’t verify, which introduces regulatory risk and operational blind spots. Complete distrust means the expensive AI system becomes irrelevant: analysts bypass it and return to manual investigations, eliminating any efficiency gain.

The regulatory environment makes this worse. Security and compliance frameworks like SOC 2, ISO 27001, and industry-specific requirements increasingly demand audit trails for security decisions. When an AI SOC agent makes a response decision, compliance teams need documentation showing what evidence was evaluated, what decision criteria were applied, and why alternative explanations were ruled out. A black box agent can’t produce this documentation because the decision process isn’t structured for cyber investigation audit requirements. It’s optimized for prediction, not explanation.
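To make the documentation requirement concrete, here is a minimal sketch of a structured decision record that captures the three things compliance teams ask for: evidence evaluated, criteria applied, and alternatives ruled out. The `DecisionRecord` class, its field names, and the sample values are all hypothetical; none of the frameworks named above prescribes this shape, and it is not any vendor's actual format.

```python
import json
from dataclasses import dataclass, asdict


# Hypothetical record shape, for illustration only.
@dataclass(frozen=True)
class DecisionRecord:
    action: str
    evidence_evaluated: list[str]
    decision_criteria: list[str]
    alternatives_ruled_out: dict[str, str]  # explanation -> why it was rejected


record = DecisionRecord(
    action="disable account jdoe",
    evidence_evaluated=["sign-in logs", "MFA logs"],
    decision_criteria=[
        "sign-ins from two countries within 10 minutes",
        "MFA not satisfied",
    ],
    alternatives_ruled_out={"corporate VPN": "neither source IP is a VPN egress"},
)

# Serialize the record so it can be stored alongside the response action.
audit_json = json.dumps(asdict(record), indent=2, sort_keys=True)
print(audit_json)
```

The point of the sketch is that the record is produced at decision time. A system that only emits a confidence score has nothing to put in the last two fields.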

Building the Glass Box: What True Autonomy Requires

The solution isn’t to abandon autonomous investigations; it’s to architect them for transparency from the ground up. This requires treating the investigation process itself as a first-class artifact that can be examined, validated, and refined. At Command Zero, what we’re building is a “glass box” approach: AI agents perform the investigation work, but every step of that work is visible, auditable, and modifiable.

Command Zero implements this through three core principles that transform autonomous cyber investigations from opaque automation into SOC AI trusted recommendations: Transparency, Pivotability, and Customization.

Transparency: Making Every Investigation Step Visible

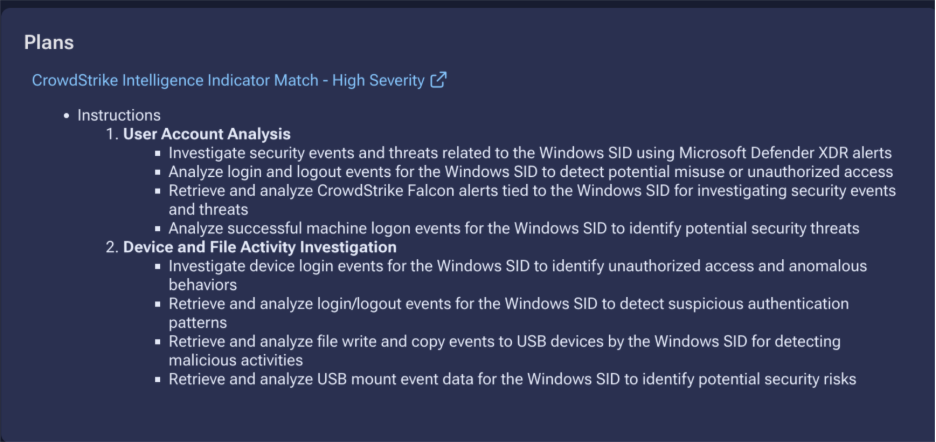

The first principle addresses the fundamental auditability problem. Before Command Zero executes a single query against your security data, the system presents the complete investigation plan in a human-readable format. This isn’t a high-level summary or a simplified workflow diagram. It’s the actual methodology the system will follow, showing every question that will be asked, every data source that will be queried, and every investigation facet that will be applied.

An analyst reviewing an alert can see exactly what the autonomous investigation will examine before it runs. They understand the evidence gathering strategy, the correlation logic, and the decision points that will determine the investigation outcome. This investigation plan becomes the primary artifact for cyber investigation audit purposes: complete documentation of the investigative methodology, generated as part of the investigation itself rather than reconstructed after the fact.
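One way to picture a plan that is reviewable before execution is as plain data that can be rendered to text. The sketch below is an illustration under assumptions, not Command Zero's implementation: `PlanStep`, `InvestigationPlan`, the data source names, and the sample alert are all hypothetical.

```python
from dataclasses import dataclass, field


# Hypothetical types, for illustration only.
@dataclass(frozen=True)
class PlanStep:
    question: str     # what the investigation will ask
    data_source: str  # where the answer will be looked up
    facet: str        # which aspect of the alert this step covers


@dataclass
class InvestigationPlan:
    alert: str
    steps: list[PlanStep] = field(default_factory=list)

    def render(self) -> str:
        """Return the full plan as text an analyst can review before execution."""
        lines = [f"Investigation plan for: {self.alert}"]
        for number, step in enumerate(self.steps, start=1):
            lines.append(
                f"{number}. [{step.facet}] {step.question} (source: {step.data_source})"
            )
        return "\n".join(lines)


plan = InvestigationPlan(
    alert="Impossible-travel sign-in",
    steps=[
        PlanStep("Where did the user sign in from in the last 24 hours?",
                 "identity_provider_logs", "authentication"),
        PlanStep("Was MFA satisfied on each sign-in?",
                 "identity_provider_logs", "authentication"),
        PlanStep("Is either source IP a known corporate VPN egress?",
                 "network_inventory", "false-positive check"),
    ],
)

# Nothing has been queried yet; this only shows what would be asked.
print(plan.render())
```

Because the plan is data rather than model internals, the same object can be shown to an analyst, stored for audit, and handed to whatever executes the queries.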

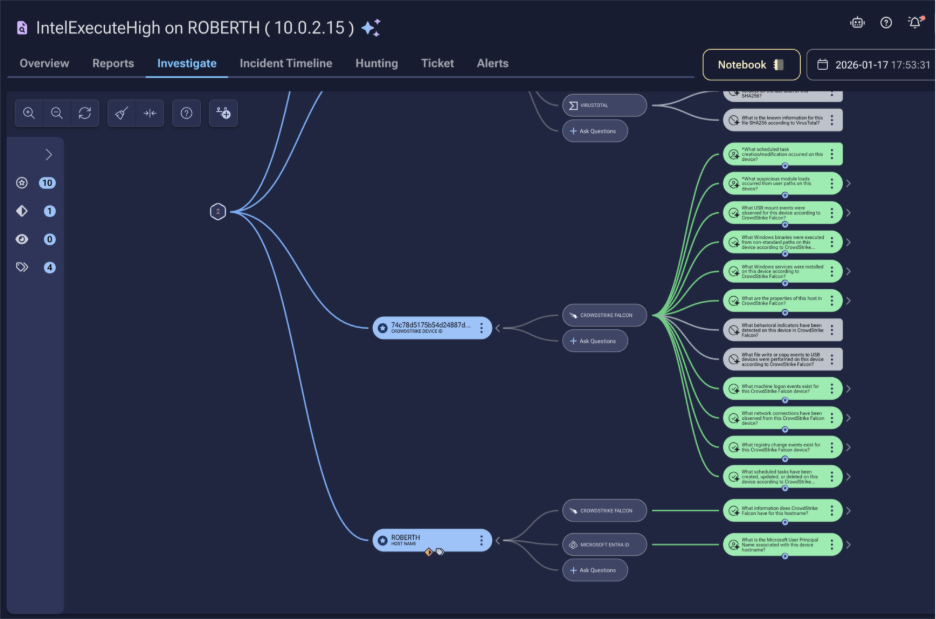

But transparency doesn’t stop at the planning stage. Command Zero’s Investigation tab provides a full visual flow of the investigation as it executes and after completion. This interface shows every question asked, every source queried, and every result returned in a chronological, navigable format. An analyst can trace the investigation from initial alert through every evidence gathering step to final conclusion.

This visual investigation flow functions as the stack trace for security investigations. Just as developers examining a software error can trace back through the exact sequence of function calls that led to the failure, security analysts can trace back through the exact sequence of investigative steps that led to a particular conclusion. Every data point is visible. Every correlation is explicit. Every decision is documented.
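The stack trace analogy can be sketched in a few lines: if every step records which earlier step prompted it, a conclusion can be walked back to the initial alert. This is a simplified illustration, assuming a hypothetical `FlowRecord` shape with parent links; it does not describe how the Investigation tab is actually built.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical record shape, for illustration only.
@dataclass(frozen=True)
class FlowRecord:
    step_id: int
    parent_id: Optional[int]  # the step whose result prompted this one
    question: str
    source: str
    result: str


def trace_back(records: list[FlowRecord], step_id: int) -> list[FlowRecord]:
    """Walk parent links from a given step back to the initial alert."""
    by_id = {record.step_id: record for record in records}
    path = []
    current = by_id.get(step_id)
    while current is not None:
        path.append(current)
        if current.parent_id is None:
            break
        current = by_id.get(current.parent_id)
    return path  # requested step first, initial step last


flow = [
    FlowRecord(1, None, "What triggered the alert?", "alert_queue",
               "sign-in from two countries within 10 minutes"),
    FlowRecord(2, 1, "Was MFA satisfied?", "identity_provider_logs",
               "yes, on both sign-ins"),
    FlowRecord(3, 1, "Is either IP a corporate VPN egress?", "network_inventory",
               "second IP is a VPN egress"),
    FlowRecord(4, 3, "Conclusion", "analysis",
               "likely benign: VPN explains the location change"),
]

for record in trace_back(flow, 4):
    print(f"#{record.step_id} {record.question} -> {record.result}")
```

Tracing from step 4 visits steps 4, 3, and 1, which is the chain of evidence behind that conclusion. Step 2 was executed but did not lead to it, so it is not on the path.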

The value for SOC AI explainability is immediate and practical. When leadership asks: “Why did the system classify this as malicious?”, you open the Investigation tab and walk them through the evidence sequence. When compliance reviewers ask: “Did the investigation check for false positive indicators?”, you point to the specific queries and results that addressed that question. When an analyst questions a conclusion, they can examine the investigation flow, identify exactly where they disagree with the assessment, and understand what additional evidence would change the outcome.

This level of transparency transforms autonomous investigations from systems you have to trust into systems you can verify. The distinction is critical. Trust requires accepting outcomes based on the system’s track record and vendor claims. Verification means examining the actual investigative work and validating the reasoning process. Only one of these approaches meets regulatory requirements. Only one provides the audit trail security operations actually need.

Pivotability: Takeover From Autonomous to Manual Investigation Without Loss

The second principle acknowledges a fundamental reality about autonomous security investigations: no generic system will handle every scenario perfectly. Novel attack patterns, environmental specifics, unusual business contexts, and sophisticated threat actor techniques create situations where the standard investigation plan needs human expertise. Black box agents fail catastrophically here because they offer no middle ground. You either accept the agent’s conclusion or start a completely new manual investigation, losing all the work the autonomous system already completed.

Command Zero’s approach treats every autonomous investigation as a foundation for deeper analysis when needed. An analyst can examine the investigation flow, identify where additional context is required, and seamlessly transition from autonomous to manual investigation without losing any of the baseline work. They can ask follow-up questions, query additional data sources, expand the investigation scope, or explore alternative hypotheses - all while preserving the evidence and correlations the autonomous investigation already gathered.
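A pivot without loss can be sketched as an append-only case record shared by the agent and the analyst. The example below is a minimal illustration under assumptions: `Investigation`, `Evidence`, the actor labels, and the sample findings are hypothetical and are not Command Zero's data model.

```python
from dataclasses import dataclass, field


# Hypothetical types, for illustration only.
@dataclass(frozen=True)
class Evidence:
    actor: str     # "agent" or an analyst identifier
    question: str
    finding: str


@dataclass
class Investigation:
    alert: str
    evidence: list[Evidence] = field(default_factory=list)

    def record(self, actor: str, question: str, finding: str) -> None:
        # Append-only: nothing gathered earlier is replaced or dropped.
        self.evidence.append(Evidence(actor, question, finding))

    def by_actor(self, actor: str) -> list[Evidence]:
        return [item for item in self.evidence if item.actor == actor]


case = Investigation(alert="Unusual mailbox rule created")
case.record("agent", "Who created the rule?", "user jdoe")
case.record("agent", "Where does the rule forward mail?", "external address")

# The analyst pivots to manual work on the same case, not a new one.
case.record("analyst:maria", "Is the external address a known partner?",
            "yes, approved vendor")

for item in case.evidence:
    print(f"{item.actor}: {item.question} -> {item.finding}")
```

The design choice that matters is that manual follow-up questions land in the same record as the autonomous ones, tagged by who asked them, so the handoff costs nothing and the audit trail stays continuous.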

This pivotability changes the operational dynamics of AI-augmented security operations. Instead of forcing a binary choice between “trust the AI completely” or “ignore it and do everything manually,” analysts can leverage the AI’s comprehensive baseline coverage and enhance it with human expertise where it matters. The autonomous investigation handles the time-consuming work of gathering evidence from dozens of sources, performing systematic correlations, and executing routine pattern matching. When analyst judgment is needed (interpreting ambiguous behavior, understanding organizational context, or exploring novel attack vectors), they take over from a position of comprehensive situational awareness rather than starting from scratch.

For tier-2 and tier-3 analysts, this means they can focus their expertise on the genuinely difficult analytical challenges rather than the mechanical work of data gathering and basic correlation. The autonomous investigation provides the evidence foundation. The analyst provides the interpretive expertise, environmental context, and adaptive thinking that no automated system can replicate. The result is investigations that combine machine speed and coverage with human insight and judgment.

This approach also limits the blast radius of imperfect autonomous investigations. When the AI gets something wrong (it misinterprets ambiguous behavior, misses environmental context, or applies logic that doesn’t fit the specific scenario), analysts catch it during their review of the investigation flow and correct course. You’re not making an all-or-nothing bet on whether the autonomous investigation is 100% correct. You’re gaining substantial speed and coverage while maintaining human oversight at critical decision points.

Customization: Adapting Investigation Logic to Your Environment

The third principle addresses the reality that every organization’s security environment is unique. Generic investigation plans optimized for broad applicability will miss environment-specific indicators, overlook relevant data sources unique to your architecture, and waste cycles on queries that aren’t relevant to your business. Black box agents handle this diversity poorly because their logic is essentially fixed. You might be able to adjust configuration parameters, but you can’t fundamentally adapt the investigative methodology to match your organizational reality.

Command Zero allows teams to refine and correct security AI at a granular level. Organizations can modify investigation plans to include custom questions tailored to their environment, add investigation facets that query their specific data sources, and encode institutional knowledge about what “normal” looks like in their architecture. When new integrations come online or organizational context changes, the investigation logic can evolve to incorporate that new information.

These customizations aren’t buried in configuration files or separated from the investigation execution. They become part of the transparent investigation plan and visible investigation flow. When an analyst views an investigation that used customized logic, they see exactly what organization-specific intelligence was applied. The custom questions appear alongside the generic baseline. The custom data sources are queried and displayed in the investigation flow. The institutional knowledge becomes part of the auditable investigation process.

This means an investigation plan for credential misuse can be adapted to include checks against your specific privileged account naming conventions, query your custom identity management systems that aren’t industry-standard products, and correlate against business processes and access patterns unique to your organization. The autonomous investigation becomes more accurate and more relevant because it’s investigating your actual environment, not a vendor’s generic assumption about what enterprise security architectures look like.
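The credential misuse example can be sketched as a merge of organization-specific questions into a generic baseline, with each question tagged by origin so the customization stays visible. This is an illustration under assumptions: the `Question` type, the `customize` function, the data source names, and the `adm-*` naming convention are all hypothetical.

```python
from dataclasses import dataclass


# Hypothetical type, for illustration only.
@dataclass(frozen=True)
class Question:
    text: str
    data_source: str
    origin: str  # "baseline" or "custom"


def customize(baseline: list[Question], custom: list[Question]) -> list[Question]:
    """Return baseline questions followed by custom ones, skipping exact duplicates."""
    seen = {(q.text, q.data_source) for q in baseline}
    merged = list(baseline)
    for question in custom:
        key = (question.text, question.data_source)
        if key not in seen:
            merged.append(question)
            seen.add(key)
    return merged


baseline = [
    Question("Did the account sign in from a new device?",
             "identity_provider_logs", "baseline"),
    Question("Were privileges escalated after sign-in?",
             "directory_audit_logs", "baseline"),
]
custom = [
    # Hypothetical naming convention for privileged accounts.
    Question("Does the account match the adm-* privileged naming convention?",
             "directory_audit_logs", "custom"),
    # Same check as a baseline question; customize() skips it.
    Question("Did the account sign in from a new device?",
             "identity_provider_logs", "custom"),
]

plan = customize(baseline, custom)
for q in plan:
    print(f"[{q.origin}] {q.text} (source: {q.data_source})")
```

Keeping the `origin` tag on every question is what lets an analyst or auditor see which parts of a given investigation came from the generic baseline and which encode local knowledge.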

The strategic value extends beyond accuracy improvements. When experienced analysts identify gaps in the generic investigation approach (missing data sources, overlooked correlation patterns, environment-specific indicators) they can provide feedback and encode that knowledge directly into the investigation plans. This institutional knowledge capture transforms individual analyst expertise into organizational capability that scales across all similar future investigations. The junior analyst gets the benefit of the senior analyst’s experience automatically, embedded in the investigation methodology itself.

As your organization’s security posture evolves (new systems deployed, new threat intelligence incorporated, new detection logic implemented), the investigation plans can evolve in parallel. The customization capability means your autonomous investigations grow more sophisticated over time, adapting to your changing environment rather than remaining frozen at whatever generic baseline the vendor shipped.

The Stack Trace for Security Investigations

Think about how software debugging works. When an application throws an error, developers don’t just see “something went wrong”; they get a stack trace showing exactly what code path executed, what functions were called, what parameters were passed, and where the failure occurred. This transparency makes debugging possible. You can trace from the error back through the execution history, identify the specific condition that caused the problem, and implement a fix that addresses the root cause rather than the symptom.

Security investigations need the same level of transparency. When an autonomous agent flags an alert as malicious, you need the equivalent of a stack trace: the complete investigation execution showing what evidence was examined, what conclusions were drawn at each step, what correlations were performed, and how the final determination was reached. Command Zero’s investigation flow provides exactly this: a complete, navigable record of the investigative process that can be examined, questioned, validated, and used as the foundation for deeper analysis.

This transparency enables a feedback loop that black box agents fundamentally can’t support. When an analyst identifies an issue with an autonomous investigation (a data source that should have been queried but wasn’t, an assumption that doesn’t hold in this specific environment, a false positive pattern that needs to be excluded), they can trace exactly where in the investigation logic the adjustment needs to be made. That correction can be encoded back into the investigation plan through customization, improving all future investigations of similar alerts. The system gets demonstrably smarter through explicit refinement, not just through opaque retraining on larger datasets.

Moving From Trust Exercises to Verified Autonomy

The security industry’s current approach to AI agents asks organizations to make trust decisions they can’t properly evaluate. Vendors demonstrate that their agents achieve X% accuracy on benchmark datasets, but operational reality introduces variables those benchmarks don’t capture. Environmental specifics, evolving attack patterns, organizational context, and the long tail of unusual scenarios create conditions where generic accuracy claims don’t translate to reliable outcomes in your specific SOC.

Command Zero’s glass box approach, built on transparency, pivotability, and customization, transforms this dynamic. Instead of asking “Do you trust our AI?”, the question becomes “Can you verify what the AI investigated?” The answer to that second question is yes, for three reasons: the investigation process is completely visible in the investigation plan and investigation flow, analysts can seamlessly take over or enhance the investigation when needed, and the investigation logic can be refined to match your organizational reality.

Autonomy becomes something you can verify rather than something you have to accept on faith. The investigation plan shows you what will be examined before it runs. The investigation flow shows you what was actually examined and what the results were. The pivotability lets you enhance the investigation when human expertise is required. The customization lets you refine the logic based on your environment and your team’s knowledge. At every stage, the system provides the transparency needed for verification, audit, and continuous improvement.

This doesn’t eliminate the need for sophisticated AI capability. It directs that capability toward the problems where it provides maximum value. The autonomous investigation handles comprehensive evidence gathering across dozens of data sources, systematic correlation of temporally distributed indicators, and routine pattern matching against known attack signatures at machine speed and scale. Human expertise focuses on interpretation of ambiguous evidence, application of environmental context the system can’t know, and decision-making under uncertainty where judgment matters more than computational power. The result is faster, more thorough investigations that maintain the auditability and explainability security operations require while actually leveraging the speed and coverage advantages of AI automation.

The black box agent problem isn’t unsolvable; it’s an architectural choice. Building AI systems that optimize for transparency alongside accuracy, that enable rather than replace human expertise, and that can be refined based on organizational reality creates autonomous capabilities security organizations can actually deploy with confidence. That’s the difference between AI that replaces trust with verification and AI that demands trust without providing the means to verify.

True autonomy in security operations requires visibility, not just velocity. Glass boxes, not black boxes. Investigation processes that can be examined in complete detail, questioned at every decision point, enhanced when human expertise is needed, and refined based on organizational learning. When every step is transparent through the investigation plan and investigation flow, when analysts can seamlessly pivot from autonomous to manual investigation, and when the investigation logic can be customized for your environment, autonomous cyber investigations transform from a liability risk into an operational advantage that delivers both speed and accountability.